If you had an NVIDIA-powered system, you could pull off that basement remodel without a hitch...

By Gary S. Vasilash

If you are, say, redoing your basement, you might think you've planned everything down to the final light fixture, only to discover along the way that something keeps it from happening as anticipated, such as a support pole in the "wrong" place. (It is, of course, in the right place. Your plans are off.)

You might think this is something that couldn't happen on professional projects.

Like when modifying an existing factory to accommodate a new vehicle or to add capacity.

Turns out, factories can be just like basements.

Only the consequences can be greater when it turns out the support beam is in the way.

BMW plans to launch more than 40 new or updated vehicles between now and 2027.

It has more than 30 production sites to prepare.

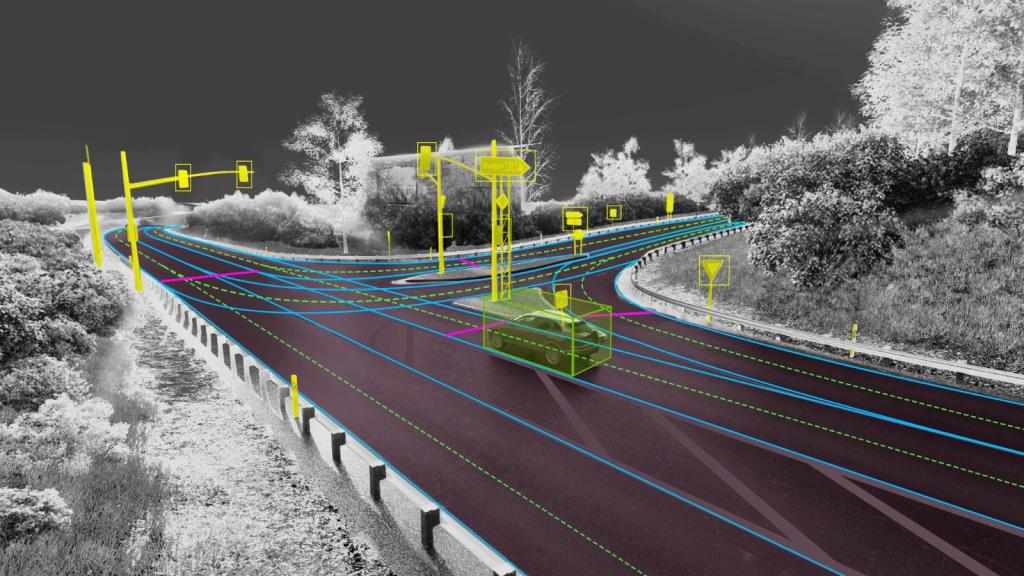

To do this with as few hitches as possible, it is using its "Virtual Factory."

That's a simulation system built on NVIDIA Omniverse.

Inputs to the simulation include everything from building data and vehicle metrics to equipment information and manual work operations.

Simulations are run in real time.

Potential collisions (e.g., banging into a column) are detected automatically.

What's surprising is that before this digital-twin approach, it was sometimes necessary to manually move a vehicle through the plant to make sure everything fit.

And in some cases it was necessary to drain the dip tanks in the paint shop, which is not only time-consuming but also expensive.

And speaking of costs: BMW says the Virtual Factory approach will cut production planning costs by as much as 30%.